Interactive Image Processing Graphs

Here you can explore a few Desmos graphs featuring gamma correction (using power law) and Linear Contrast Stretching.

Here you can explore several Desmos graphs featuring distributions, permutations/combinations, and regression. For the best experience, view these graphs on a computer rather than a phone.

Simple Java Neural Network

This project was developed as a demonstration for an undergraduate machine learning course at MSU Denver. It gave students an in-depth look at what goes into implementing a neural network. All of the code is in a single file for ease of use and portability. The source file is available on GitHub. There are three classes in the file:

- A Testing Class: The Testing class contains examples of creating and training a neural network.

- A Neural Network Class: The NeuralNetwork class has everything needed to create, define, and train a neural network. A couple of limitations keep the implementation simpler. For instance, there can be any number of hidden layers, but all hidden layers must have the same number of nodes. All layers use the sigmoid activation function, and there is no way to change this.

- A Matrix Class: The Matrix class contains simple methods for a few specific matrix operations. All "matrices" are simple 2D double arrays.
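To make the roles of the matrix operations and the sigmoid activation concrete, here is a minimal sketch of a single layer's forward pass on plain 2D double arrays. The class and method names (`ForwardPassSketch`, `multiply`, `forward`) are assumptions made for illustration and are not taken from the project's source; the real Matrix and NeuralNetwork classes may be organized differently.

```java
// Hypothetical sketch: names are illustrative, not the project's actual API.
class ForwardPassSketch {

    // Logistic sigmoid: maps any real number into the open interval (0, 1).
    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Standard matrix product of a (n x k) and b (k x m), both 2D double arrays.
    static double[][] multiply(double[][] a, double[][] b) {
        if (a[0].length != b.length) {
            throw new IllegalArgumentException("inner dimensions must match");
        }
        int n = a.length, k = b.length, m = b[0].length;
        double[][] c = new double[n][m];
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < m; j++) {
                double sum = 0.0;
                for (int p = 0; p < k; p++) {
                    sum += a[i][p] * b[p][j];
                }
                c[i][j] = sum;
            }
        }
        return c;
    }

    // One layer without bias terms: sigmoid applied element-wise to inputs * weights.
    static double[][] forward(double[][] inputs, double[][] weights) {
        double[][] z = multiply(inputs, weights);
        for (double[] row : z) {
            for (int j = 0; j < row.length; j++) {
                row[j] = sigmoid(row[j]);
            }
        }
        return z;
    }

    public static void main(String[] args) {
        double[][] inputs = {{0.0, 1.0}, {1.0, 1.0}}; // two samples, two features each
        double[][] weights = {{0.5}, {-0.5}};         // two inputs feeding one node
        System.out.println(java.util.Arrays.deepToString(forward(inputs, weights)));
    }
}
```

Each row of `inputs` is one sample and each column of `weights` is one node, so stacking several calls to `forward` would give a multi-layer network under these assumptions.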

A simple example

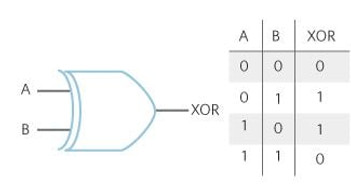

Let's look at a very simple problem that we can solve with a neural network. We will consider the XOR (exclusive or) problem. XOR is a logic gate with two inputs and one output. The inputs and outputs are either true or false, represented as 1 or 0 respectively. Below is the gate diagram and the truth table.

We will use the value pairs of A and B as the features and the output as the targets for the neural network. The features and targets can be defined in the following way.

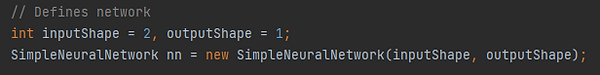

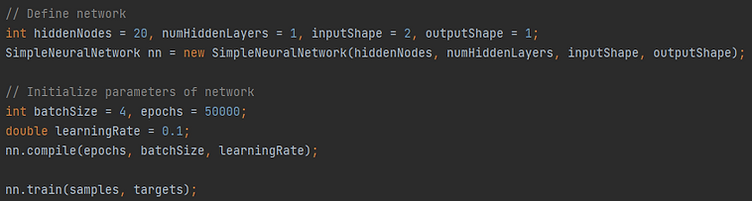
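For readers who cannot view the images, the XOR data can be written out as plain 2D double arrays, one row per case. This is a sketch only: the class name `XorData` and the constant names are assumptions, and the original code may name or lay out the arrays differently.

```java
// Hypothetical sketch of the XOR data set; names are illustrative.
class XorData {
    // Each row is one (A, B) input pair; 1.0 stands for true and 0.0 for false.
    static final double[][] FEATURES = {
        {0.0, 0.0},
        {0.0, 1.0},
        {1.0, 0.0},
        {1.0, 1.0}
    };

    // One target row per feature row: the XOR of A and B.
    static final double[][] TARGETS = {
        {0.0},
        {1.0},
        {1.0},
        {0.0}
    };

    public static void main(String[] args) {
        for (int i = 0; i < FEATURES.length; i++) {
            System.out.printf("A=%.0f B=%.0f -> %.0f%n",
                    FEATURES[i][0], FEATURES[i][1], TARGETS[i][0]);
        }
    }
}
```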

We can then define the neural network we want to fit to this data. We need two input nodes (one for A and one for B) and one output node. We will start by not using any hidden layers.

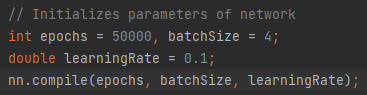

Once the network is defined, we can prepare it for training. We will use a learning rate of 0.1, train the network for 50000 epochs and use a batch size of 4.
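The settings above can be illustrated with a small stand-alone training loop for a network with two inputs, one output, no hidden layer, and no bias terms. This is a hedged sketch rather than the project's NeuralNetwork class: the names (`NoHiddenLayerSketch`, `train`, `predict`), the squared-error loss, the random initialization range, and the seed are all assumptions made for illustration.

```java
// Hypothetical sketch: not the project's NeuralNetwork class.
class NoHiddenLayerSketch {
    static final double LEARNING_RATE = 0.1;
    static final int EPOCHS = 50000;
    static final int BATCH_SIZE = 4;

    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Output of a 2-input, 1-output network with no hidden layer and no bias.
    static double predict(double[] w, double[] x) {
        return sigmoid(w[0] * x[0] + w[1] * x[1]);
    }

    // Mini-batch gradient descent on the squared error 0.5 * (p - t)^2.
    static double[] train(double[][] features, double[][] targets, long seed) {
        java.util.Random rng = new java.util.Random(seed);
        // Assumed initialization: small random weights in [-0.5, 0.5).
        double[] w = {rng.nextDouble() - 0.5, rng.nextDouble() - 0.5};
        for (int epoch = 0; epoch < EPOCHS; epoch++) {
            for (int start = 0; start < features.length; start += BATCH_SIZE) {
                int end = Math.min(start + BATCH_SIZE, features.length);
                double[] grad = new double[w.length];
                for (int i = start; i < end; i++) {
                    double p = predict(w, features[i]);
                    // Chain rule: d(error)/d(w_j) = (p - t) * p * (1 - p) * x_j
                    double delta = (p - targets[i][0]) * p * (1.0 - p);
                    for (int j = 0; j < w.length; j++) {
                        grad[j] += delta * features[i][j];
                    }
                }
                // Average the gradient over the batch and take one step.
                for (int j = 0; j < w.length; j++) {
                    w[j] -= LEARNING_RATE * grad[j] / (end - start);
                }
            }
        }
        return w;
    }

    public static void main(String[] args) {
        double[][] features = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
        double[][] targets = {{0}, {1}, {1}, {0}};
        double[] w = train(features, targets, 42L);
        for (int i = 0; i < features.length; i++) {
            System.out.println(predict(w, features[i]) + " (expected " + targets[i][0] + ")");
        }
    }
}
```

With four training rows and a batch size of 4, each epoch performs exactly one weight update. Note that whatever weights training arrives at, this bias-free sketch always outputs 0.5 for the input (0, 0), because the weighted sum of two zeros is zero.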

Now the neural network is ready to be trained.

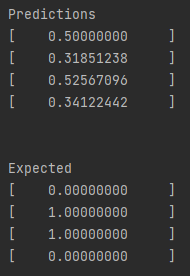

We can print out the predictions on all the features and the expected outputs and compare them.

As we can see, the network is not doing a very good job at making predictions for this simple example. This is because the XOR problem is not linearly separable. That means if we plotted the (A, B) inputs as ordered pairs, there would be no way to separate the true cases from the false cases with a straight line. Neural networks with no hidden layers cannot solve this type of problem. So let's try again but add a single hidden layer with 20 nodes. We will use the same parameters for everything else as before.
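The idea of linear separability can be checked numerically. The sketch below searches a coarse grid of candidate lines `w1*A + w2*B + c = 0` and reports whether any of them puts all true cases on one side and all false cases on the other. The class name, the grid range, and the step size are assumptions chosen for illustration, and a finite grid search is a demonstration rather than a proof. The AND and OR gates are included only as contrast cases that a straight line can separate.

```java
// Hypothetical sketch: a brute-force illustration, not a proof.
class SeparabilityCheck {
    // The four (A, B) input pairs, in truth-table order.
    static final double[][] INPUTS = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};

    // Returns true if some line on the grid places every true case strictly on
    // the positive side (w1*A + w2*B + c > 0) and every false case on the other.
    static boolean separableOnGrid(boolean[] labels) {
        for (int i = -8; i <= 8; i++) {
            for (int j = -8; j <= 8; j++) {
                for (int k = -8; k <= 8; k++) {
                    // Integer loop counters avoid floating-point drift in the grid.
                    double w1 = i * 0.25, w2 = j * 0.25, c = k * 0.25;
                    boolean allMatch = true;
                    for (int n = 0; n < INPUTS.length; n++) {
                        boolean positive = w1 * INPUTS[n][0] + w2 * INPUTS[n][1] + c > 0;
                        if (positive != labels[n]) {
                            allMatch = false;
                            break;
                        }
                    }
                    if (allMatch) {
                        return true;
                    }
                }
            }
        }
        return false;
    }

    public static void main(String[] args) {
        boolean[] xor = {false, true, true, false};
        boolean[] and = {false, false, false, true};
        boolean[] or = {false, true, true, true};
        System.out.println("XOR separable on grid: " + separableOnGrid(xor));
        System.out.println("AND separable on grid: " + separableOnGrid(and));
        System.out.println("OR separable on grid: " + separableOnGrid(or));
    }
}
```

The search fails for XOR on any grid, not just this one: the two true cases require `w1 + c > 0` and `w2 + c > 0`, the (0, 0) case requires `c <= 0`, and together these force `w1 + w2 + c > 0`, which contradicts the (1, 1) case being false.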

Now that we have this new network with one hidden layer we can print out the predictions and the expected outputs.

This looks a lot better. If we rounded each prediction to the nearest integer, we would have the correct predictions. In our case, that is exactly what we want. Note that this neural network uses gradient descent without any optimizations and does not have bias terms, so it takes a lot of hidden nodes and a lot of epochs to get somewhat decent results. As such, this neural network should be used for educational purposes only and should not be used to solve complex problems.
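The rounding step mentioned above can be sketched in a few lines. The class and method names are assumptions, and the prediction values in `main` are made-up placeholders chosen for illustration; they are not output from the actual network.

```java
// Hypothetical sketch: turns raw sigmoid outputs into 0/1 labels.
class RoundPredictions {
    // Rounds each prediction to the nearest integer (0 or 1 for sigmoid outputs).
    static int[] toLabels(double[] predictions) {
        int[] labels = new int[predictions.length];
        for (int i = 0; i < predictions.length; i++) {
            labels[i] = (int) Math.round(predictions[i]);
        }
        return labels;
    }

    public static void main(String[] args) {
        // Placeholder values only, NOT real results from the network.
        double[] examplePredictions = {0.04, 0.95, 0.96, 0.06};
        System.out.println(java.util.Arrays.toString(toLabels(examplePredictions)));
    }
}
```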